The EM algorithm (Expectation-Maximization Algorithm) is an iterative algorithm for estimating the parameters of statistical models that contain latent (hidden) variables in the observed data.

For example, consider the age distribution of fitness club members. There may be two groups: people in their 20s who come for strength training and people in their 50s who come for metabolic health management, each with age distributions following a normal distribution. In this case, which group each member belongs to is a “latent variable” that cannot be directly observed.

The basic idea of the EM algorithm is to simultaneously estimate both the model parameters and the latent variables by alternating between the following two steps:

- E-step (Expectation Step): Using the current model parameters, estimate the expected values (or probability distribution) of the latent variables from the observed data. In the above example, this involves computing the “responsibility” indicating which group each member belongs to.

- M-step (Maximization Step): Using the expected values of the latent variables estimated in the E-step, maximize the model parameters. In the above example, this involves updating the parameters such as the mean and variance of each group’s normal distribution, using the responsibilities as weights.

By repeating this process, the estimates of the model parameters and latent variables gradually improve, and the log-likelihood eventually converges to a local maximum.

Gaussian Mixture Models and the EM Algorithm

The EM algorithm is commonly used for parameter estimation in Gaussian Mixture Models (GMM), which assume that observed data is generated from a superposition of multiple Gaussian distributions.

The Q Function

In the EM algorithm, the hypothetical situation where both the observed data \(x\) and the latent variables \(z\) are known is called “complete data.” We consider the log-likelihood of the joint distribution \(p(x, z | \theta)\) of the complete data.

However, in practice the latent variables \(z\) are unknown. Therefore, we compute the posterior distribution \(p(z|x, \hat{\theta})\) of the latent variables \(z\) using the current parameter estimate \(\hat{\theta}\), and then compute the expected value of the complete data log-likelihood with respect to this distribution. This is the Q function.

\[ Q(\theta, \hat{\theta}) = \mathbb{E}\_{p(z|x,\hat{\theta})}[\log p(x,z|\theta)] = \int p(z|x,\hat{\theta})\log p(x,z|\theta)dz \]In the M-step of the EM algorithm, we find the parameter \(\theta\) that maximizes this Q function.

Parameter Update Formulas for GMM via the EM Algorithm

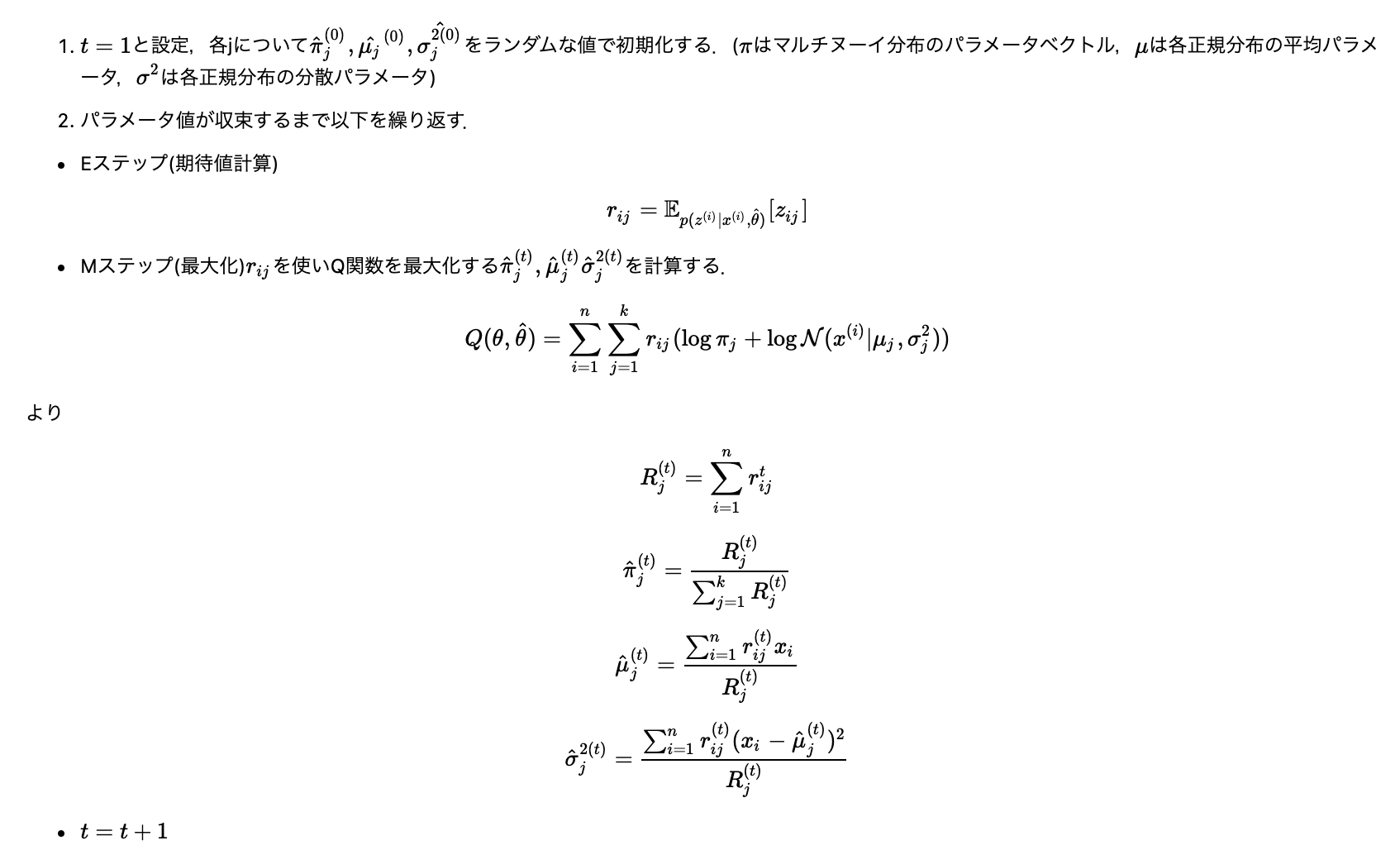

The update formulas for estimating the parameters of a Gaussian mixture model (weights \(\pi_j\), means \(\mu_j\), variances \(\sigma_j^2\) of each Gaussian) using the EM algorithm are as follows:

- Initialization: Initialize the parameters \(\hat{\pi}_j^{(0)}, \hat{\mu}_j^{(0)}, \hat{\sigma}_j^{2(0)}\) of each Gaussian with random values.

- E-step: Compute the “responsibility” \(r_{ij}\) indicating which Gaussian generated each data point \(x_i\). \( r*{ij} = p(z*{ij}=1 | x*i, \hat{\theta}^{(t)}) = \frac{\hat{\pi}\_j^{(t)} \mathcal{N}(x_i | \hat{\mu}\_j^{(t)}, \hat{\sigma}\_j^{2(t)})}{\sum*{k=1}^K \hat{\pi}_k^{(t)} \mathcal{N}(x_i | \hat{\mu}\_k^{(t)}, \hat{\sigma}\_k^{2(t)})} \) Here, \(z_{ij}=1\) indicates that data point \(x_i\) belongs to the \(j\)-th Gaussian.

- M-step: Using the responsibilities \(r_{ij}\) computed in the E-step, calculate the new parameters \(\hat{\theta}^{(t+1)}\). \( N*j = \sum*{i=1}^N r*{ij} \) \( \hat{\pi}\_j^{(t+1)} = \frac{N_j}{N} \) \( \hat{\mu}\_j^{(t+1)} = \frac{1}{N_j} \sum*{i=1}^N r*{ij} x_i \) \( \hat{\sigma}\_j^{2(t+1)} = \frac{1}{N_j} \sum*{i=1}^N r\_{ij} (x_i - \hat{\mu}\_j^{(t+1)})^2 \)

- Convergence check: Repeat steps 2 and 3 until the parameter changes become sufficiently small or the maximum number of iterations is reached.

References

- Taro Tezuka, “Understanding Bayesian Statistics and Machine Learning,” Kodansha (2017)